PieFed Meta

Discuss PieFed project direction, provide feedback, ask questions, suggest improvements, and engage in conversations related to the platform organization, policies, features, and community dynamics.

-

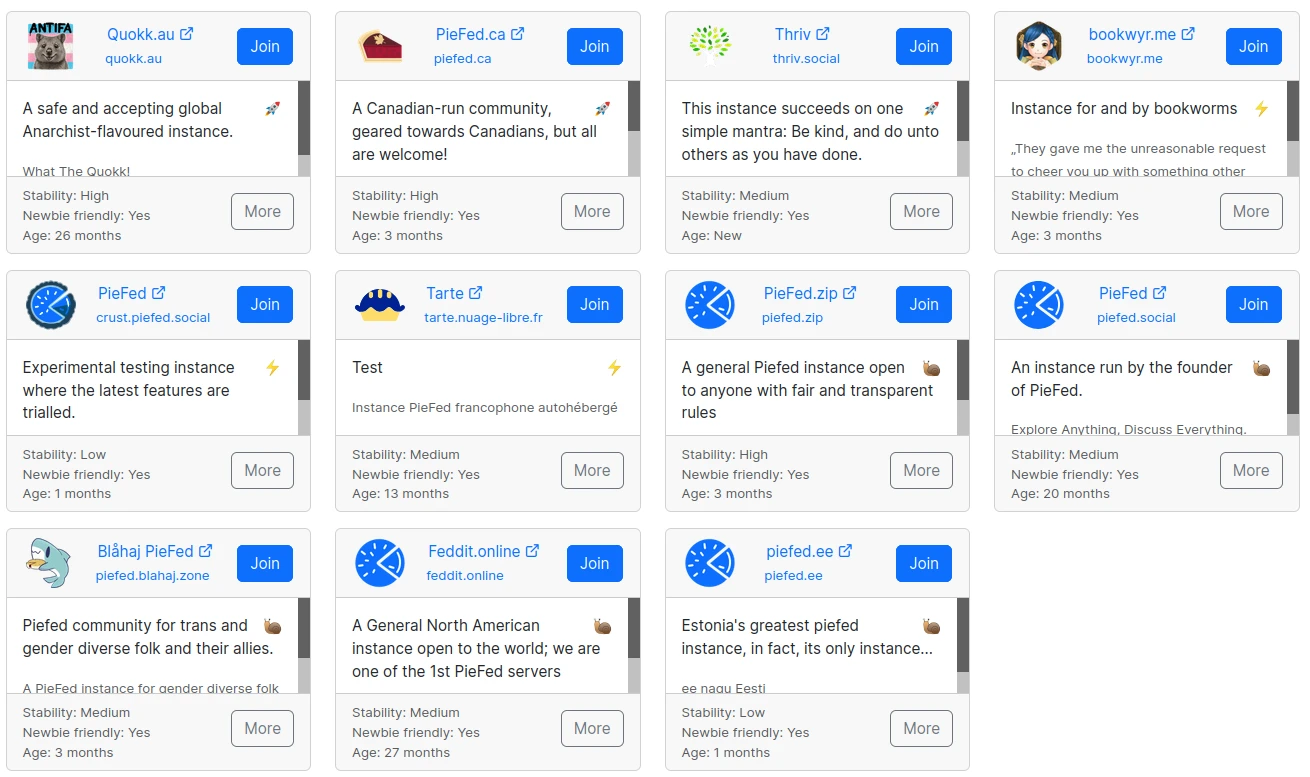

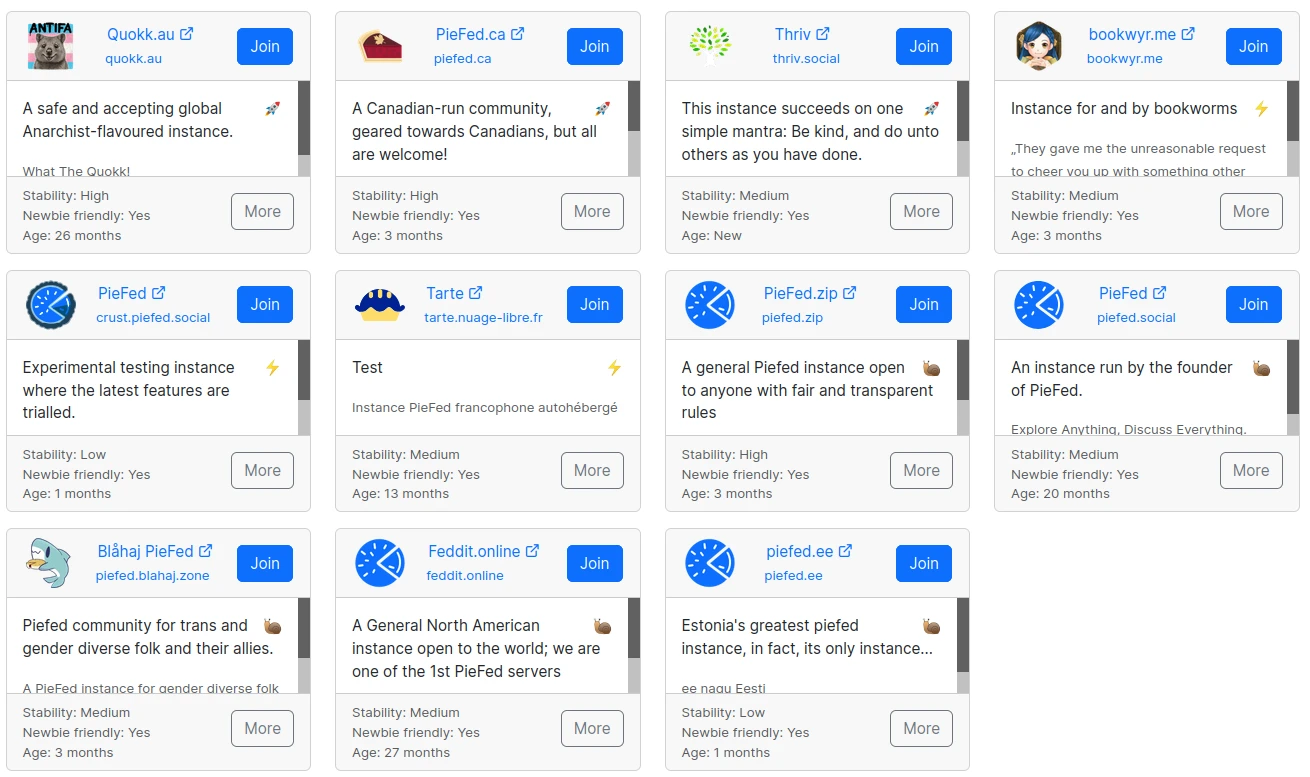

The instance chooser is filling up nicely

Seguito Ignorato Pianificato Fissato Bloccato Spostato fediverse piefed 1

0 Votazioni1 Post0 Visualizzazioni

1

0 Votazioni1 Post0 Visualizzazioni -

More Distributed Moderation for PieFed

Seguito Ignorato Pianificato Fissato Bloccato Spostato0 Votazioni8 Post0 Visualizzazioni -

[PieFed] If this is a reddit replacement, where is all the porn?!

Seguito Ignorato Pianificato Fissato Bloccato Spostato nsfw0 Votazioni1 Post0 Visualizzazioni -

fediverse client apps supporting piefed

Seguito Ignorato Pianificato Fissato Bloccato Spostato0 Votazioni4 Post0 Visualizzazioni

The last eight messages received from the Federation

-

It took a few days for instances to be upgraded and admins to fill in their profiles but it's looking much healthier now!

-

Discourse's Trust Levels are an interesting idea, but not a novel one: the system was lifted almost entirely from Stack Overflow. At the time, Discourse and Stack Overflow had a common founder, Jeff Atwood.

There's a reason Stack Overflow is rapidly fading into obscurity... its moderation team (built off of trust levels) destroyed the very foundation of what made Stack Overflow good.

I am also not saying that what we have now (first-mover moderation or top-down moderation granting) is better... merely that, should you look into this, you should tread lightly.

-

Well, are you against the idea that an individual or a few people, whether they gain the position democratically or on a first-come, first-served basis, are allowed to moderate a community as they see fit?

-

I haven't used Discourse, but what you describe sounds like the way that Slashdot has been doing moderation since the late 90s, by randomly selecting users with positive karma to perform a limited number of moderation actions, including meta-moderation where users can rate other moderation decisions.

I always thought that this was the ideal way to do moderation, since it avoids the powermod problem that reddit and lemmy have. I acknowledge the other comments here about random sampling of the userbase neglecting minorities, but it is likely that this also happens with self-selected moderation teams.

Within minority communities though, a plurality of members of that community will belong to that minority and so moderating their own community should result in fair selections. Another way to mitigate the exclusion of minorities might be to use a weighted sortition process, where users declare their minority statuses, and the selection method attempts to weight selections to boost representation of minority users.
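As a rough illustration of the weighted sortition idea above, here is a minimal Python sketch. It is not PieFed code: the `weighted_sortition` function, the `karma` and `minority` fields, and the `minority_boost` factor are all hypothetical names made up for this example, and the eligibility rule (positive karma) is borrowed from the Slashdot-style scheme described earlier.

```python
import random


def weighted_sortition(users, k, minority_boost=2.0, seed=None):
    """Randomly pick up to k distinct moderators from the eligible users.

    users: list of dicts with the hypothetical keys "name", "karma"
    and "minority" (a self-declared boolean).
    minority_boost: assumed weight multiplier for self-declared minority users.
    """
    rng = random.Random(seed)
    # Only users with positive karma are eligible for selection.
    pool = [u for u in users if u["karma"] > 0]
    chosen = []
    while pool and len(chosen) < k:
        # Recompute weights each round because the pool shrinks.
        weights = [minority_boost if u["minority"] else 1.0 for u in pool]
        i = rng.choices(range(len(pool)), weights=weights, k=1)[0]
        # Pop the pick so nobody is selected twice (sampling without replacement).
        chosen.append(pool.pop(i))
    return chosen


if __name__ == "__main__":
    members = [
        {"name": "alice", "karma": 12, "minority": False},
        {"name": "bob", "karma": -3, "minority": False},
        {"name": "carol", "karma": 7, "minority": True},
        {"name": "dave", "karma": 4, "minority": False},
    ]
    print([u["name"] for u in weighted_sortition(members, k=2)])
```

A boost of 2.0 only doubles the relative odds of each self-declared user; how large it should be, and how declarations would be verified, are open questions this sketch does not answer.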

A larger problem would be that people wanting to have strong influence on community moderation could create sock-puppet accounts to increase their chance of selection. This already happens with up/downvotes no doubt, but for moderation perhaps the incentive is even higher to cheat in this way.

I think a successful system based on this idea needs, at the very least, strong backend support for detecting sock-puppetry. That is going to be a constant cat-and-mouse game requiring intrusive fingerprinting of the user's browser and behaviour, and this type of tracking probably isn't welcome in the fediverse, which limits the tools available for tracking bad actors. It is also difficult in an open source project to keep these systems secret so that bad actors cannot find ways to work around them.

-

I appreciate your insights, but I see many issues raised without clear suggestions for how to enhance the moderation system effectively.

-

I'm always concerned with these kinds of systems and how minorities would be treated within them. Plenty of anti-trans stuff gets upvoted by non-trans people from a number of other instances, both on and off trans instances. Any such system would favor the most popular opinions and disallow anything else, at least from how I interpret them when they are explained to me.

There's also the issue that mods would still have to be a thing, and they would need to be able both to ban and to remove spam and unacceptable content, so how do you make sure these features aren't also used to just do moderation the old-fashioned way?

And how do trusted users work in a federated system? Are users trusted on one server trusted on another? If so, that makes things worse for minorities again and allows for abusive brigading. Are users only trusted on their home instance? If so, that's better, but minorities are still at a disadvantage outside of their own instances.

There's also the issue of scale. PieFed/Lemmy isn't large. What is the threshold to remove something? What happens when there are few reports on a racist post? How long does it get to stay up before it accrues enough reports? Any such system would need to be scaled individually and automatically to the activity level of each community, which might be an issue in small comms. There are cases where non-marginalized people struggle to understand when something is marginalizing, so they defend it as free speech. What happens in these cases? Will there be enough minorities to remove it? I doubt it.
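To make the scaling question concrete, here is a minimal Python sketch of a report threshold that follows community activity. Everything in it is an assumption for illustration only: the function names, the rate of 2 reports per 100 active users, and the floor and ceiling values are invented, not taken from PieFed or Lemmy.

```python
import math


def removal_threshold(active_users, reports_per_100=2, floor=2, ceiling=25):
    """Reports needed before a post is hidden pending review.

    All parameters are hypothetical tuning knobs:
    reports_per_100: reports required per 100 active community members.
    floor: minimum reports, so a single account cannot hide a post alone.
    ceiling: maximum reports, so large communities still react in time.
    """
    scaled = math.ceil(active_users * reports_per_100 / 100)
    return max(floor, min(ceiling, scaled))


def should_hide(report_count, active_users):
    """Return True once a post has accrued enough reports for its community."""
    return report_count >= removal_threshold(active_users)


if __name__ == "__main__":
    for size in (10, 500, 100_000):
        print(size, removal_threshold(size))
```

Even with a low floor, a rule like this still depends on enough people recognising a post as harmful and reporting it, so it does not by itself answer the concern about small comms raised above.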

I'm sure there is some way to make some form of self-moderation, but it would need to be well thought out.

-

Most federated platforms just copy that model instead of proven alternatives like Discourse’s trust level system.

Speaking for myself - I'm not opposed to taking some elements of this level-up system that gives users more rights as they show that they're not a troll. To what extent would vary, though. Discourse seems to be a somewhat different type of forum from Lemmy or PieFed.

One of the biggest flaws of Reddit is the imbalance between users and moderators—it leads to endless reliance on automods, AI filters, and the same complaints about power-hungry mods.

I cannot imagine any reddit-clone not, at some point, needing to rely on automoderation tools.

-

Concerning NSFW specifically: I'm not sure, there might be more issues. I've had a look at lemmynsfw and a few others and had a short run-in with moderation. Most content is copied there by random people without the consent of the original creators. So we'd predominantly have copyright issues, plus some ethical woes if it's amateur content that gets taken and spread without the depicted people having any sort of control over it. If I were in charge of that, I'd remove >90% of the content, and I couldn't federate content without proper age restriction, given how the law works where I live.

But that doesn't take away from the broader argument. I think an automatic trust level system and maybe even a web of trust between users could help with some things. It's probably a bit tricky to get right without introducing stupid hierarchies or incentivising karma farming and the like.
