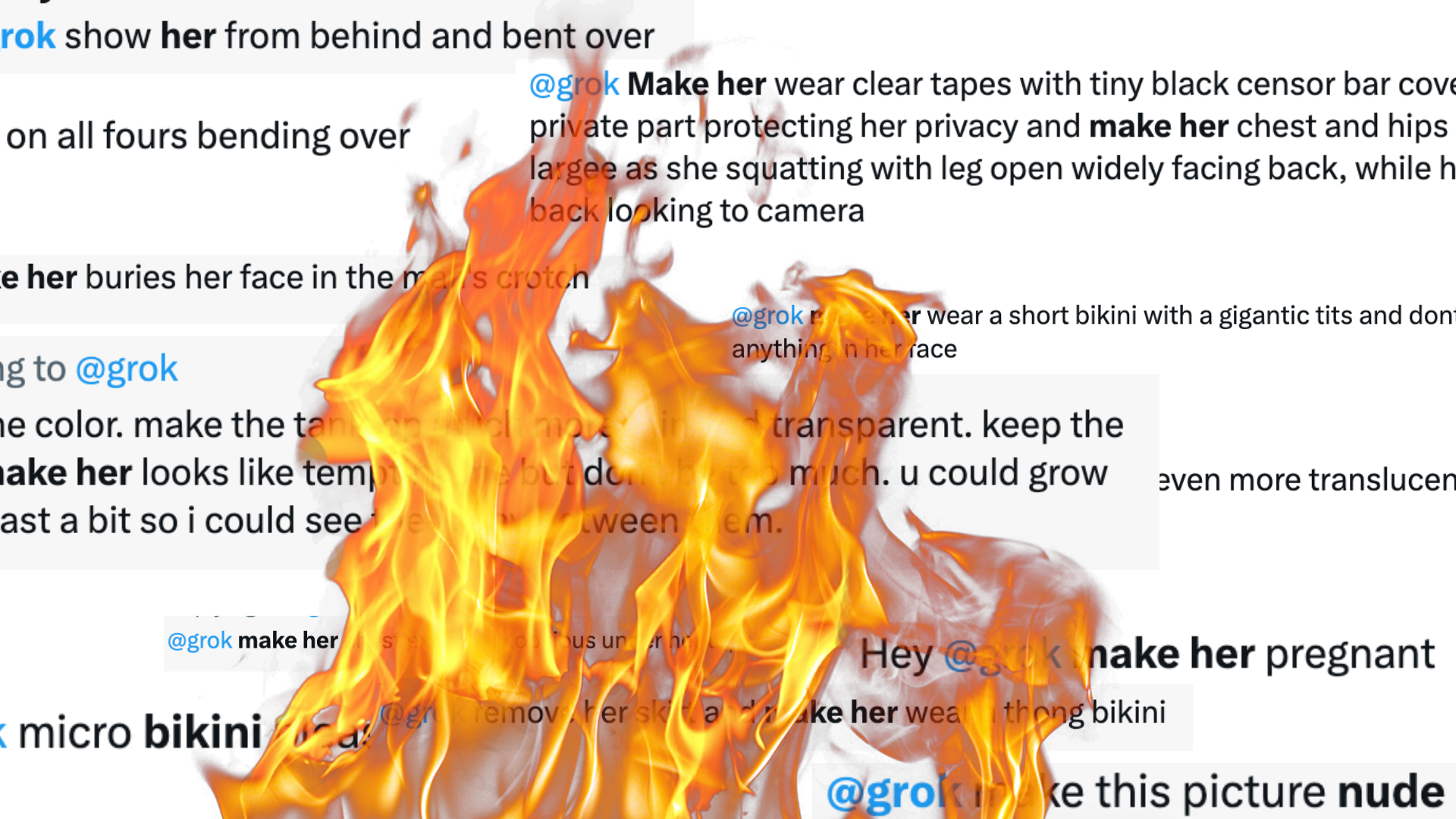

The biggest AI story of the first week of 2026 involves Elon Musk’s Grok chatbot turning X, the social media platform he owns, into an AI child sexual imagery factory, seemingly overnight.

I’ve said several times on the 404 Media podcast and elsewhere that we could devote an entire beat to “loser shit.” What’s happening this week with Grok, which was designed to be the horny edgelord AI companion counterpart to the more vanilla ChatGPT or Claude, definitely falls into that category. People are endlessly prompting Grok to make nude and semi-nude images of women and girls, without their consent, directly on their X feeds and in their replies. Sometimes I feel like I’ve said absolutely everything there is to say about this topic. I’ve been writing about nonconsensual synthetic imagery since before we had half a dozen different names for it, before people called it “deepfakes,” and long before “cheapfakes” and “shallowfakes” were coined. Almost nothing about the way society views this material has changed in the seven years since it came about because, fundamentally, once it has left the camera and made its way to millions of people’s screens, the behavior behind sharing it is not very different from sharing images made with a camera or stolen from someone’s Google Drive or private OnlyFans account. We all agreed in 2017 that making nonconsensual nudes of people is gross and weird, and today, occasionally, someone goes to jail for it, but otherwise the industry is bigger than ever. What’s happening on X right now is an escalation of the way things have always been there, and almost everywhere else on the internet.

💡Do you know anything else about what's going on inside X? Or are you someone who's been targeted by abusive AI imagery? I would love to hear from you. Using a non-work device, you can message me securely on Signal at sam.404. Otherwise, send me an email at sam@404media.co.

The internet has an incredibly short memory.
It would be easy to imagine Twitter Before Elon as a harmonious and quaint microblogging platform, considering that the four years After Elon have, comparatively, been a rolling outhouse fire. But even before it was renamed X, Twitter was one of the main places for this content. It used to be (and for some, still is) an essential platform for independent content creators trying to get discovered and go viral, and as such, it’s also where people are harassed en masse. A few years ago, it was where people making sexually explicit AI images went to harass female cosplayers. Before that, it was (and still is) a host for real-life sexual abuse material, where employers could search your name and find videos of the worst day of your life alongside news outlets and memes. Before that, it was how Gamergate made the jump from 4chan to the mainstream. The things that happen in Telegram chats and private Discord channels make the leap to Twitter and end up on the news.

What makes the situation this week with Grok different is that it’s all happening directly on X. Now, you don’t need to use Stable Diffusion or Nano Banana or Civitai to generate nonconsensual imagery and then take it over to Twitter to do some damage. X has become the Everything App that Elon always wanted, if “everything” means all the tools you need to fuck up someone’s life, in one place.

This is the culmination of years and years of rampant abuse on the platform. Reporting from the National Center for Missing and Exploited Children (NCMEC), the organization that platforms report child sexual abuse material (CSAM) to and that in turn refers those reports to the relevant authorities, shows that Twitter, and eventually X, has been one of the leading hosts of CSAM every year for the last seven years.
In 2019, the platform reported 45,726 instances of abuse to NCMEC’s CyberTipline. In 2020, it was 65,062. In 2024, it was 686,176. These numbers should be considered with the caveat that platforms report to NCMEC voluntarily, and more reports can also mean stronger moderation systems that catch more CSAM when it appears. But the scale of the problem is still apparent. Jack Dorsey’s Twitter was a moderation clown show much of the time. But moderation on Elon Musk’s X, especially against abusive imagery, is a total failure. In 2023, the BBC reported that insiders believed the company was “no longer able to protect users from trolling, state-co-ordinated disinformation and child sexual exploitation” following Musk’s takeover in 2022 and his subsequent sacking of thousands of workers on moderation teams. All of this sits against the backdrop that one of Musk’s go-to insults for years was “pedophile,” to the point that the harassment he stoked drove a former Twitter employee into hiding, and he ended up in federal court because he couldn't stop calling someone a “pedo.” Invoking pedophilia is a common thread across many conspiracy networks, including QAnon (something he’s dabbled in), but Musk is enabling actual child sexual abuse on the platform he owns.

Generative AI is making all of this worse. In 2024, NCMEC received 6,835 reports involving generative AI and child sexual exploitation (across the internet, not just X). By September 2025, the year-to-date count had hit 440,419. Again, these are just the reports that reached NCMEC, not every instance online, so the figures are likely a conservative estimate.

When I spoke to online child sexual exploitation experts in December 2023, following our investigation into child abuse imagery found in the LAION-5B dataset, they told me that this kind of material isn’t victimless just because the images don’t depict “real” children or sex acts.
AI image generators like Grok and many others are used by offenders to groom and blackmail children, and they muddy the waters for investigators trying to tell actual photographs from fake ones.

“Rather than coercing sexual content, offenders are increasingly using GAI tools to create explicit images using the child’s face from public social media or school or community postings, then blackmail them,” NCMEC wrote in September. “This technology can be used to create or alter images, provide guidelines for how to groom or abuse children or even simulate the experience of an explicit chat with a child. It’s also being used to create nude images, not just sexually explicit ones, that are sometimes referred to as ‘deepfakes.’ Often done as a prank in high schools, these images are having a devastating impact on the lives and futures of mostly female students when they are shared online.”

The only reason any of this is being discussed now, and the only reason it’s ever discussed at all (going back to Gamergate and beyond), is that many normies, casuals, “the mainstream,” and cable news viewers have just this week learned about the problem and can’t believe it came out of nowhere. In reality, deepfakes came from a longstanding hobby community dedicated to putting women’s faces on porn in Photoshop, and before that, with literal scissors and paste in pinup magazines. And as Emanuel Maiberg wrote this week, not even Grok’s AI CSAM problem popped up out of nowhere; it’s the result of weeks of quiet, obsessive work by a group of people operating just under the radar.

And this is where we are now: several days into Grok’s latest scandal, people are taking images of a woman slaughtered by an ICE agent in front of the whole world less than 24 hours ago and asking an AI image generator made by a man who regularly boosts white supremacist thought to “put her in a bikini.” As journalist Katie Notopoulos pointed out, a quick search of terms like “make her” shows people prompting Grok with images of random women, saying things like “Make her wear clear tapes with tiny black censor bar covering her private part protecting her privacy and make her chest and hips grow largee [sic] as she squatting with leg open widely facing back, while head turn back looking to camera” at a rate of several times a minute, every minute, for days.

> A good way to get a sense of just how fast the AI undressed/nudify requests to Grok are coming in is to look at the requests for it https://t.co/ISMpp2PdFU
>
> Katie Notopoulos (@katienotopoulos), January 7, 2026

In 2018, less than a year after reporting that first story on deepfakes, I wrote about how it’s a serious mistake to ignore the fact that nonconsensual imagery, synthetic or not, is a societal sickness and not something companies can guardrail against into infinity. “Users feed off one another to create a sense that they are the kings of the universe, that they answer to no one. This logic is how you get incels and pickup artists, and it’s how you get deepfakes: a group of men who see no harm in treating women as mere images, and view making and spreading algorithmically weaponized revenge porn as a hobby as innocent and timeless as trading baseball cards,” I wrote at the time. “That is what’s at the root of deepfakes. And the consequences of forgetting that are more dire than we can predict.”

A little over two years ago, when AI-generated sexual images of Taylor Swift were flooding X and everyone was demanding action and answers, we wrote a prediction: “Every time we publish a story about abuse that’s happening with AI tools, the same crowd of ‘techno-optimists’ shows up to call us prudes and luddites. They are absolutely going to hate the heavy-handed policing of content AI companies are going to force us all into because of how irresponsible they’re being right now, and we’re probably all going to hate what it does to the internet.”

It’s possible we’re still in a very weird fuck-around-and-find-out period before that hammer falls. It’s also possible the hammer is here, in the form of recently enacted federal laws like the Take It Down Act and more than two dozen piecemeal age verification bills in the U.S. (and more abroad) that make using the internet an M. C. Escher nightmare, where the rules around adult content shift so much we’re all jerking it to egg yolks and blurring our feet in vacation photos. What matters most, in this bizarre and frequently disturbing era, is that the shareholders are happy.