This study—from Anthropic, no less—is rather damning of the entire generative AI project.

-

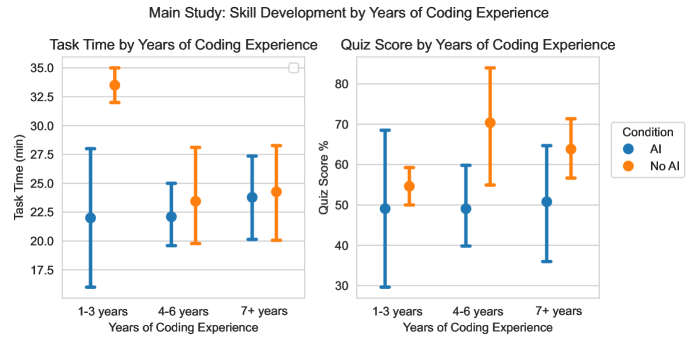

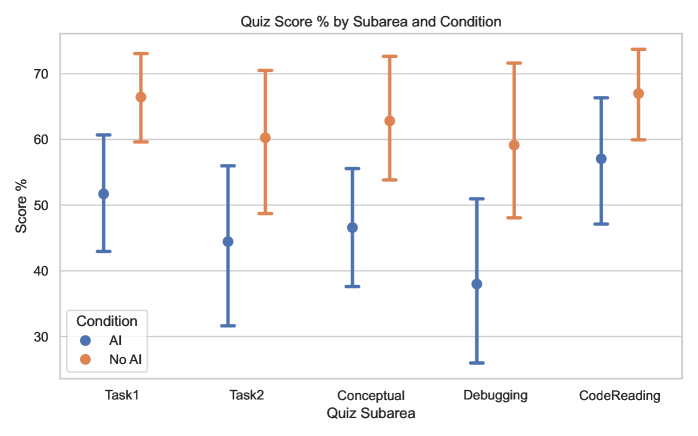

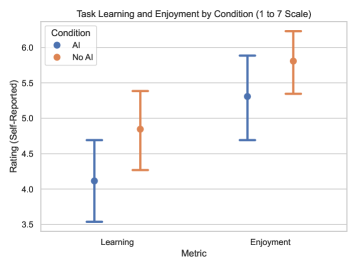

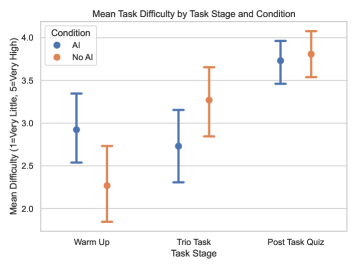

This study—from Anthropic, no less—is rather damning of the entire generative AI project. In code creation, the realm where it should shine, not only were the time gains marginal, but developers understood their code far, far less. And they didn't even have more fun doing the work!

But to me the most concerning part of this study is the fact that Anthropic could not get the control (non-AI) group to comply. Up to 35% of the "control" in the initial studies used AI tools despite instructions not to. What kind of behavior does that sound like?

-

@mttaggart I’m not that smart, MT… what kind of behavior (what’s the answer)?

-

@scottwilson Addictive! It's not even an analogy anymore; generative AI is an addictive substance that causes cognitive decline. If you cannot stop yourself from using it even when compelled to for a scientific study, seems like you have a dependency.

-

What’s the problem with that? If they fire all the engineers, then there’ll be nobody to be confused.

Problem solved!

-

@mttaggart Ah, now I get it. Dang.

-

@mttaggart @scottwilson I can attest to that. I’m not a coder, but a marketer who got caught up in the pressure and ended up using AI about 1-2 times a week for a year.

I quit end of June last year, and I really do mean “quit” because I feel like an addict in recovery counting down the months I’ve been sober. I had very severe cravings to touch the chatbots when I stopped, and I still feel them at least once a month now.

The itch is “this task is hard and frustrating, what if I just asked AI to help me just this once?” It’s gotten much easier to ignore but god. I didn’t even use this stuff every day or like it at any point!

-

@mariyadelano @scottwilson Thank you for sharing this experience, and I'm really impressed with your resolve!

-

@mttaggart @scottwilson thank you for such a good post summarizing the Anthropic study - I was trying to share the paper with some folks earlier, but they aren’t used to reading academic docs, so your post has been a much easier distillation to send them

-

RE: https://hachyderm.io/@jenniferplusplus/115988258931946578

There is a thread where @jenniferplusplus showed that this is a well-intentioned but poorly designed experiment: https://agilealliance.social/@jenniferplusplus@hachyderm.io/115988258929755734

Please spread the word

-

@mttaggart @scottwilson this is one of the reasons I dread when management (probably soon) introduces an AI mandate where I work. I have OCD. I also have a history of addiction (25 years sober soon!), and multiple family members who've struggled with addiction. I *know* that I am vulnerable to this. That's the reason I've strictly avoided *any* kind of gambling throughout my life; I know I have exactly the sort of mind that would make me vulnerable to becoming a zombie chained to a slot machine.

Soon, I will likely be facing orders to become a zombie chained to a slop machine.

-

@mlevison @mttaggart @jenniferplusplus I was trying to find that thread so I could link it here, but this specific post calls out a huge flaw that assuredly helps explain why the control group used AI tools: https://hachyderm.io/@jenniferplusplus/115993972337118404
