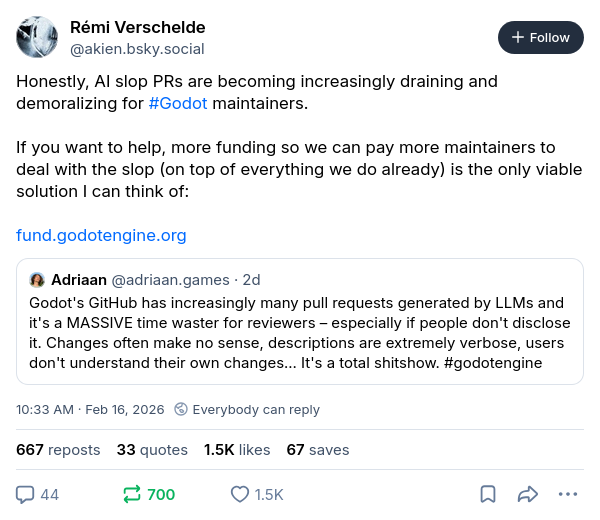

At this point, open-source development itself is being DDoS'ed by LLMs and their human users.

At the risk of being a bit gross: this is the software development version of peeing in the pool. If *one* person does it, it's gross but will probably go unnoticed. However, at this point, it's like having 100 people all lined up on the side of the pool peeing into it in unison. I don't really want to swim in that, do you? And now they've started eyeing the punchbowl and watercoolers too. #AI #AIslop #LLMs

-

cwebber@social.coop shared this topic

-

@jzb do we perhaps need an open-source project to create slop-detectors? If they caught even a fraction of the submissions, they'd probably get 99% of the authors. For us to ban.

-

@davecb Perhaps. Rather than turning it into another spam-type arms race, though, it would be vastly better if humans would exercise some restraint in their use of such tools.

The fact that humans are unlikely to do so suggests that it is wildly irresponsible to keep churning out such tools for mass consumption.

-

@elmiko For now. As I said to @davecb, it will just turn into another spam-type arms race. A technological advance in detecting slop will just lead to technological advances in trying to make slop undetectable. And on and on. We've seen this story before with spam, adware, phishing, etc.

The problem isn't LLMs. It's how people are going to use them. If the evidence is that people will use them irresponsibly, then it's irresponsible to keep advancing the technology and promoting it, IMO.

Perhaps -- maybe -- fans of LLMs will see the maintainers of open-source projects that they care about complaining about the slop deluge and stop throwing slop over the walls, and tell their fellow fans that they should cool it. I'm not optimistic on that point, though, because all evidence so far points to LLM-user culture being one of disrespect for people outside the LLM bubble and flagrant disregard for the concept of consent.

The attitude seems to be "we like this, and so should you, and we're not going to respect your wishes. Now shut up and consume the slop we serve you." I'd love to be proven wrong on this.

-

@jzb I agree. Alas, a likely way we stop the churning is also called "the next recession". Last year I de-risked my whole portfolio.

I'd genuinely like to see a fix at the supply end, with everyone shunning the owners of the slop factories, but all I could think of was a way to allow projects to work at the demand end, shunning individual slop-producers (:-()

-

@jzb @davecb i agree, it's a human problem not a technical problem.

> The attitude seems to be "we like this, and so should you, and we're not going to respect your wishes. Now shut up and consume the slop we serve you." I'd love to be proven wrong on this.

i also would love for you to be wrong, but i fear not. humans are not good at self-regulating nor self-reflecting.

-

@davecb Yeah... I need to find a way to park my 401K funds in something recession-proof. I wish there was a way to just say "pull this out of circulation for a bit and just give me normal savings interest."

401Ks were a great way for capital to force *everyone* to let businesses play games with *everyone else's money* while also letting businesses abandon the practice of pensions.

Sorry - that's a whole different rant than AI slop. I'm also fun at parties.

(That's a lie. I don't go to parties.)

-

@elmiko Sometimes. Not often, but sometimes, I kind of envy those types of people. It must be so easy to go through life not giving a damn about these things.

-

@jzb i dream about my tiny house on the mountain away from society far too frequently these days. i feel my partner would be upset though XP

-

@jzb Does the US have GICs (Guaranteed Investment Certificates)? With only 5 *big* banks in Canada, they're pretty safe unless we have a full-fledged depression.

-

@jzb Of course, Scotiabank has US offices, as do the Bank of Montreal and the Toronto-Dominion Bank. The Royal Bank of Canada owns City National Bank. That assumes you can smuggle it out of a 401k (:-))

If you can't, see if you can use US certificates of deposit. An American friend just bought a bunch

-

valhalla@social.gl-como.it shared this topic

-

@jzb it's worse: we have built hundreds of machines peeing in the pool. And their reservoir of pee is infinite.

-

oblomov@sociale.network shared this topic

swelljoe@mas.to shared this topic

(My shop's neighbors are a tattoo artist and a hairdresser, so it will even be easy!)